About the Discovery API

Every time dbt Cloud runs a project, it generates and stores information about the project. The metadata includes details about your project’s models, sources, and other nodes along with their execution results. With the dbt Cloud Discovery API, you can query this comprehensive information to gain a better understanding of your DAG and the data it produces.

By leveraging the metadata in dbt Cloud, you can create systems for data monitoring and alerting, lineage exploration, and automated reporting. This can help you improve data discovery, data quality, and pipeline operations within your organization.

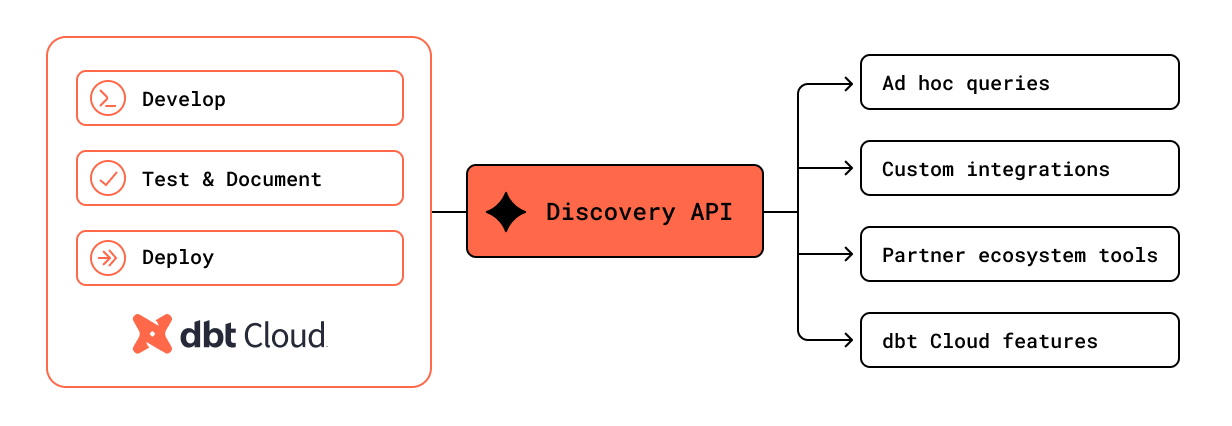

You can access the Discovery API through ad hoc queries, custom applications, a wide range of partner ecosystem integrations (like BI/analytics, catalog and governance, and quality and observability), and by using dbt Cloud features like model timing and [data health tiles](/docs/collaborate/data-tile).

You can query the dbt Cloud metadata:

- At the environment level for both the latest state (use the `environment` endpoint) and historical run results (use `modelByEnvironment`) of a dbt Cloud project in production.

- At the job level for results on a specific dbt Cloud job run for a given resource type, like `models` or `test`.
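As a rough illustration of an environment-level query, the sketch below builds (but does not send) a GraphQL request for the `environment` endpoint. The endpoint URL, the `applied` and `models` field names, the `BigInt` variable type, and the bearer-token header are assumptions rather than details stated on this page, so check the API schema and your account's access URL before relying on them.

```python
import json
import urllib.request

# Assumption: a multi-tenant endpoint URL; your account's access URL may differ.
DISCOVERY_URL = "https://metadata.cloud.getdbt.com/graphql"

# Assumption: only the `environment` endpoint is named in the text above;
# the nested fields and variable types here are illustrative.
QUERY = """
query ($environmentId: BigInt!, $first: Int!) {
  environment(id: $environmentId) {
    applied {
      models(first: $first) {
        edges { node { name uniqueId } }
      }
    }
  }
}
"""


def build_request(token: str, environment_id: int, first: int = 10) -> urllib.request.Request:
    """Build a POST request carrying the GraphQL query and its variables."""
    payload = {
        "query": QUERY,
        "variables": {"environmentId": environment_id, "first": first},
    }
    return urllib.request.Request(
        DISCOVERY_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {token}",  # assumed auth scheme
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Placeholder token and environment ID; nothing is sent over the network here.
request = build_request(token="YOUR_TOKEN", environment_id=123)
print(request.get_method(), request.full_url)
```

To actually run the query, you could pass the request to `urllib.request.urlopen` and decode the JSON response body.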

Prerequisites

- dbt Cloud multi-tenant or single-tenant account

- You must be on a Team or Enterprise plan

- Your projects must be on dbt version 1.0 or later. Refer to Upgrade dbt version in Cloud to upgrade.

What you can use the Discovery API for

The following use cases describe the analysis you can do with the API and the results you can achieve by integrating with it.

To use the API directly or integrate your tool with it, refer to Use cases and examples for detailed information.

- Performance

- Quality

- Discovery

- Governance

- Development

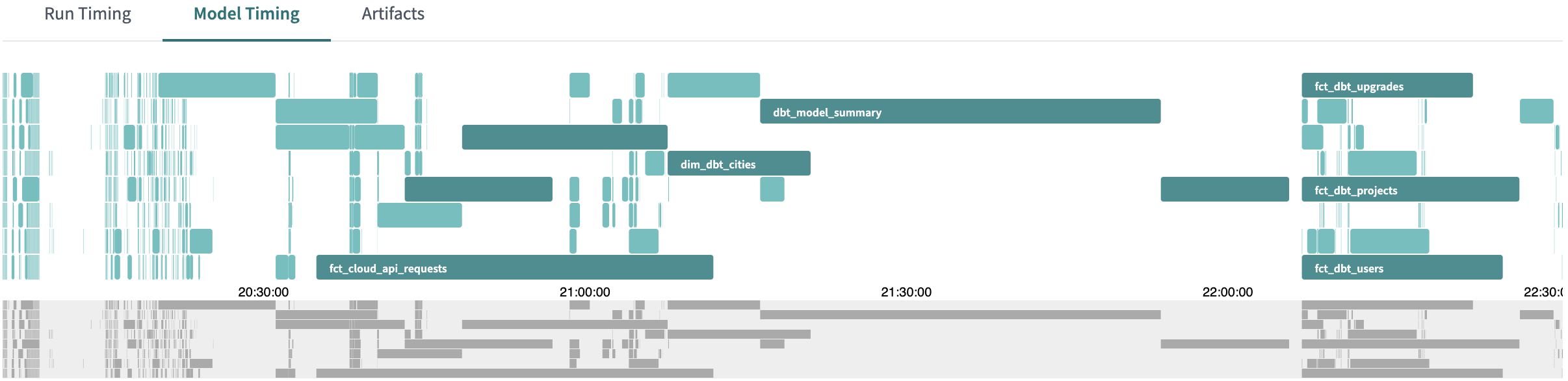

Use the API to look at historical information like model build time to determine the health of your dbt projects. Finding inefficiencies in orchestration configurations can help decrease infrastructure costs and improve timeliness. To learn more about how to do this, refer to Performance.

For example, you can use the model timing tab to identify and optimize bottlenecks in model builds:

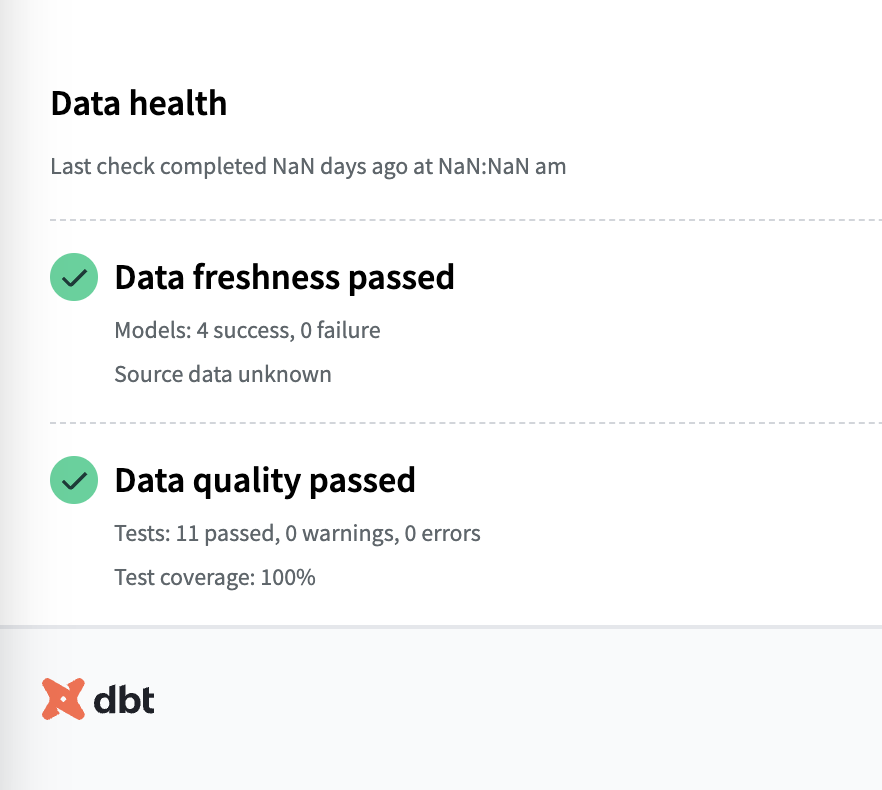
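A similar analysis can be done on execution results you have already fetched from the API. The sketch below ranks models by build time to surface bottlenecks. The `ModelRun` record shape and the sample values are hypothetical, so you would need to map the API's actual fields onto them.

```python
from typing import TypedDict


class ModelRun(TypedDict):
    """Hypothetical record shape; map the API's actual fields onto it."""

    name: str
    execution_time: float  # build time in seconds


def slowest_models(runs: list[ModelRun], top_n: int = 3) -> list[ModelRun]:
    """Return the top_n longest-running models, slowest first."""
    return sorted(runs, key=lambda run: run["execution_time"], reverse=True)[:top_n]


# Made-up sample data, for illustration only.
sample_runs: list[ModelRun] = [
    {"name": "stg_orders", "execution_time": 12.4},
    {"name": "fct_orders", "execution_time": 95.0},
    {"name": "dim_customers", "execution_time": 30.1},
]

for run in slowest_models(sample_runs, top_n=2):
    print(f'{run["name"]}: {run["execution_time"]:.1f}s')
```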

Use the API to determine whether the data is accurate and up to date by monitoring test failures, source freshness, and run status. Accurate and reliable information is valuable for analytics, decision-making, and monitoring, and it helps prevent your organization from acting on bad data. To learn more about this, refer to Quality.

When used with webhooks, the API can also help you detect, investigate, and alert on issues.
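One way to picture the monitoring step is a small filter over results you have already retrieved, which turns failing tests and stale sources into alert messages. The record keys (`resource_type`, `name`, `status`) and the status values treated as alert-worthy are assumptions for illustration, not the API's documented schema.

```python
# Assumption: these status values indicate a problem worth alerting on.
ALERT_STATUSES = {"fail", "error"}


def collect_alerts(results: list[dict]) -> list[str]:
    """Return one message per result whose status is in ALERT_STATUSES."""
    alerts = []
    for result in results:
        if result["status"] in ALERT_STATUSES:
            alerts.append(
                f'{result["resource_type"]} {result["name"]}: {result["status"]}'
            )
    return alerts


# Made-up sample results, for illustration only.
sample_results = [
    {"resource_type": "test", "name": "not_null_orders_id", "status": "pass"},
    {"resource_type": "test", "name": "unique_orders_id", "status": "fail"},
    {"resource_type": "source", "name": "raw.payments", "status": "error"},
]

for message in collect_alerts(sample_results):
    print(message)
```

A webhook handler could call a function like this after each job run and forward the messages to your alerting channel.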

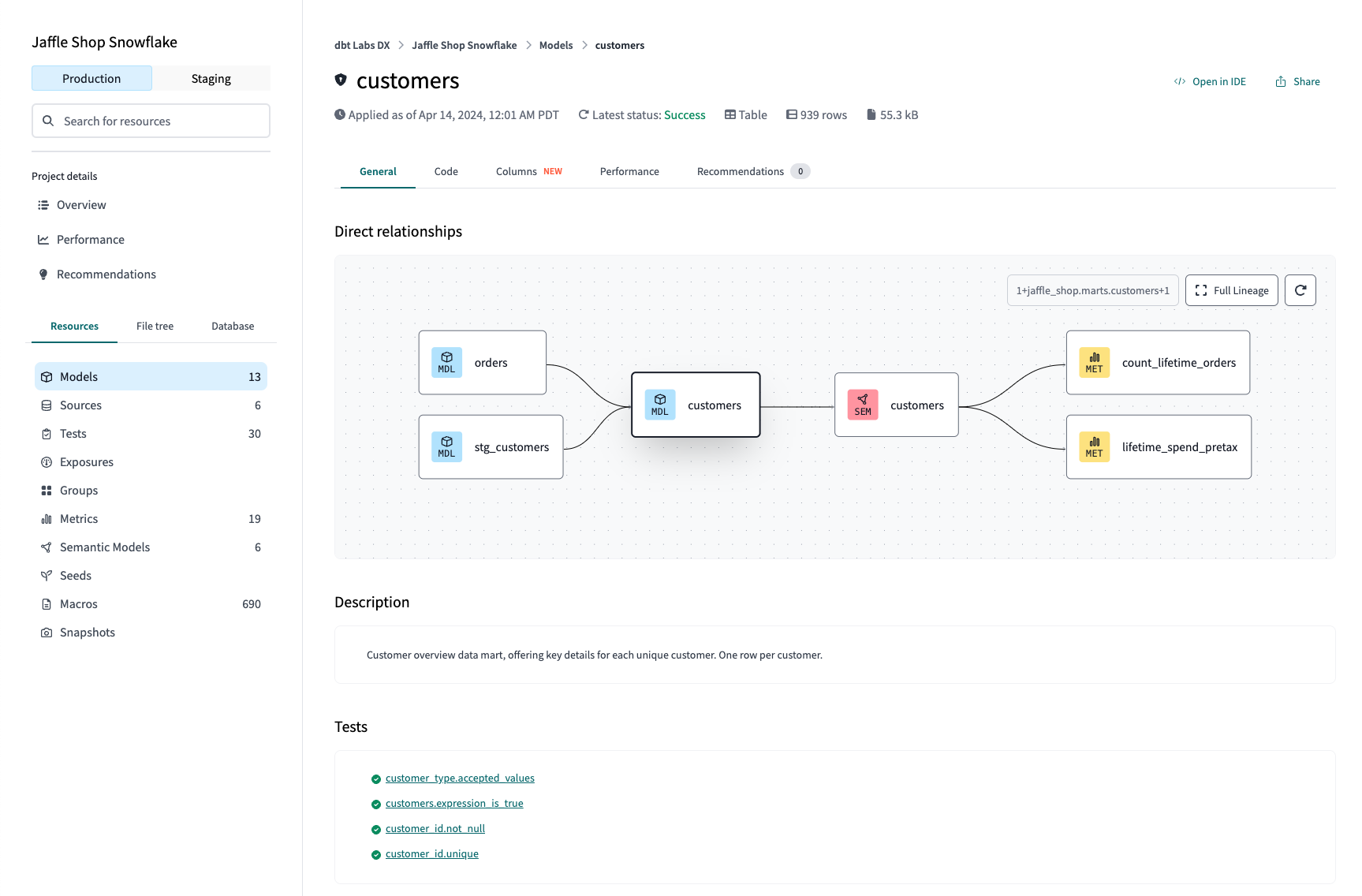

Use the API to find and understand dbt assets in integrated tools using information like model and metric definitions, and column information. For more details, refer to Discovery.

Data producers must manage and organize data for stakeholders, while data consumers need to analyze data quickly and confidently at scale to make informed decisions that improve business outcomes and reduce organizational overhead. The API is useful for data discovery experiences in catalogs, analytics, apps, and machine learning (ML) tools. It can help you understand the origin and meaning of datasets for your analysis.

Use the API to review who developed the models and who uses them to help establish standard practices for better governance. For more details, refer to Governance.

Use the API to review dataset changes and uses by examining exposures, lineage, and dependencies. From the investigation, you can learn how to define and build more effective dbt projects. For more details, refer to Development.
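To make the lineage idea concrete, the sketch below walks a dependency map to list everything downstream of a given resource, which is the kind of check you might run before changing a model. The `children` mapping and the node names are hypothetical. In practice you would build the mapping from lineage data returned by the API.

```python
from collections import deque


def downstream(children: dict[str, list[str]], start: str) -> list[str]:
    """Breadth-first walk returning every node that depends on `start`."""
    seen: set[str] = set()
    order: list[str] = []
    queue = deque([start])
    while queue:
        node = queue.popleft()
        for child in children.get(node, []):
            if child not in seen:
                seen.add(child)
                order.append(child)
                queue.append(child)
    return order


# Made-up lineage: each key maps to the nodes that directly depend on it.
sample_children = {
    "source.raw_orders": ["model.stg_orders"],
    "model.stg_orders": ["model.fct_orders"],
    "model.fct_orders": ["exposure.weekly_dashboard"],
}

print(downstream(sample_children, "model.stg_orders"))
```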

Types of project state

You can query two types of project state at the environment level:

- Definition: The logical state of a dbt project’s resources, which updates when the project is changed.

- Applied: The output of successful dbt DAG execution that creates or describes the state of the database (for example: `dbt run`, `dbt test`, source freshness, and so on).

These states let you examine the difference between a model’s definition and its applied state, so you can answer questions like "Did the model run?" or "Did the run fail?" Applied models exist as a table or view in the data platform, reflecting their most recent successful run.
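The comparison between the two states can be sketched as a simple lookup. The example below assumes you have already collected a list of defined model names and a mapping from model name to last run status. Both inputs and the `"success"` status value are hypothetical stand-ins for whatever the API returns.

```python
def describe_state(defined: list[str], applied: dict[str, str]) -> dict[str, str]:
    """Summarize each defined model by comparing it with its applied state."""
    summary = {}
    for name in defined:
        status = applied.get(name)
        if status is None:
            # Present in the definition state but absent from the applied state.
            summary[name] = "defined but not yet run"
        elif status == "success":  # assumed status value
            summary[name] = "ran successfully"
        else:
            summary[name] = f"last run ended with status: {status}"
    return summary


# Made-up inputs, for illustration only.
defined_models = ["stg_orders", "fct_orders", "dim_customers"]
applied_status = {"stg_orders": "success", "fct_orders": "error"}

for model, state in describe_state(defined_models, applied_status).items():
    print(f"{model}: {state}")
```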